- This is a personal developmental wiki; it may often be in an unstable or experimental state, and the lack of meaningful content on a page is often intentional. However, you can participate. Please: 1) create an account, 2) confirm your e-mail, 3) send Deirdre an e-mail telling her who you are and why you are interested. Thanks, --Deirdre(talk • contribs)

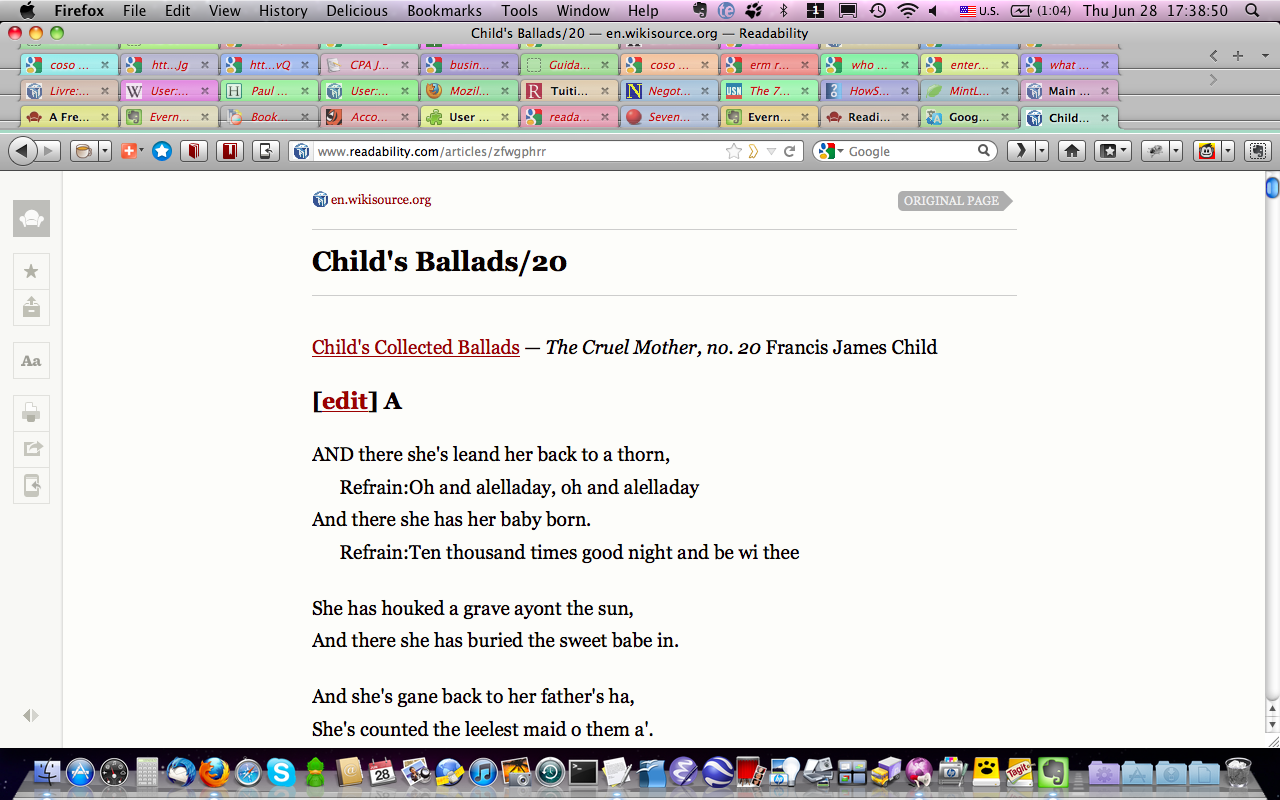

| + | == Readability on a ws page == | ||

| + | |||

| + | [[File:Screen shot 2012-06-28 Readability on enWS.png]] | ||

Latest revision as of 16:48, 28 June 2012


Success

I finally succeeded in backing up the database and the file system. I installed the most current version of Proofread Page, but it failed on update.php, so I removed it and installed the version that matches the MediaWiki version. After realizing I had to set permissions on all the folders and files it created, it's up! I haven't created the necessary namespaces yet, so Proofread Page won't function. Let me know anything you think should be changed.--Doug.(talk • contribs) 00:48, 11 October 2011 (EDT)

Now we're cooking with gas

See Special:Version--Doug.(talk • contribs) 18:54, 24 November 2011 (EST)

- See Talk:Main_Page for updates on what's installed and what's working and what's not--Doug.(talk • contribs) 16:00, 27 November 2011 (EST)

ZOMG! Scary Transclusion rocks!

Check out:

- Python - a transclusion of a non-MediaWiki wiki

- Python/raw - a transclusion of the code from the same page

- Python/subst - a substitution of the same page

--Doug.(talk • contribs) 22:05, 3 December 2011 (EST)

- More links, showing more coolness:

- Deutsch-English Dictionary/Verlag/de - the de.wikt entry for "Verlag"

- Deutsch-English Dictionary/Verlag/en - the en.wikt entry for "Verlag"

- Deutsch-English Dictionary/Verlag - side-by-side using {{multicol}} and double-scary transclusion

--Doug.(talk • contribs) 08:22, 7 December 2011 (EST)

- and more:

- Deutsch-English Dictionary/Verlag2 - This is what happens when you try to use the templates remotely via {{prefix:templatename}}

- Deutsch-English Dictionary/Verlag3 - This is what happens when you try to use the templates remotely via {{raw:prefix:templatename}}

--Doug.(talk • contribs) 09:44, 7 December 2011 (EST)

Script for Commons

Hey, on a separate issue, I need a script for adding a template to a series of JPGs, modeled on the one you did for More. The only difference is that this one has only 3 digits in the page names rather than the 4 in More's Utopia. I started trying to do it myself but got stuck on how to get the page number out of the middle of the page name. I will keep trying, but I think I need to spend more time on basics before I can do this myself. The exact case I'm looking at is commons:Category:Nietzsche's Werke, I. There are only three files there now, but there are several hundred that need to be. I was just going to recat them, but then I thought to {{book}} them, and then I thought, well, I really ought to put the navigation in, so I created Commons:Template:Nietzsche's Werke, I. Then I realized I needed to bot in not only the template but also the parameters for the page numbers, at which point I realized that a simple replace.py wouldn't work unless I made at least two runs (the first subst'ing page names and the second removing the non-numeric parts), which I recognize as silly.

- BTW, along the way I discovered a couple of pybot bugs: the first is that -titleregex only works in the mainspace (I submitted a bug report), and the second is a problem with dotall and multiline when used in user-fixes.py.--Doug.(talk • contribs) 05:50, 7 December 2011 (EST)

- I placed the first 21 pages in the category using the autocatting template, without the navigational parameters, as part of my commons bot request. I will simply replace these with a new version that has the parameters once I know how to do that.--Doug.(talk • contribs) 07:42, 7 December 2011 (EST)
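A minimal Python sketch of the page-number step asked about above, under stated assumptions. The real file names in the Nietzsche category are not given here, so the example title is hypothetical, and the regular expression assumes the page number is the only standalone run of exactly three digits in the title (the lookarounds keep it from matching inside a four-digit year). The parameter names `page`, `prev` and `next` are placeholders for whatever Commons:Template:Nietzsche's Werke, I actually expects. Capturing the number directly lets one pass build the finished template call, rather than one replace.py run to subst page names and a second to strip the non-numeric parts.

```python
import re

# Assumption: the page number is the only standalone run of exactly three
# digits in the title; the lookarounds stop it matching inside e.g. "1895".
PAGE_RE = re.compile(r"(?<!\d)(\d{3})(?!\d)")


def page_number(title: str) -> int:
    """Pull the three-digit page number out of the middle of a file title."""
    match = PAGE_RE.search(title)
    if match is None:
        raise ValueError(f"no three-digit page number in {title!r}")
    return int(match.group(1))


def nav_template(title: str, template: str, last_page: int) -> str:
    """Build one template call with hypothetical page/prev/next parameters."""
    n = page_number(title)
    # Leave prev/next empty at the ends of the book; keep the zero padding
    # so the neighbouring file names can be rebuilt from the numbers.
    prev_page = "%03d" % (n - 1) if n > 1 else ""
    next_page = "%03d" % (n + 1) if n < last_page else ""
    return "{{%s|page=%03d|prev=%s|next=%s}}" % (template, n, prev_page, next_page)


if __name__ == "__main__":
    title = "File:Nietzsche Werke I 007 Vorrede.jpg"  # hypothetical file name
    print(nav_template(title, "Nietzsche's Werke, I", last_page=500))
```

Writing each generated string to its file description page (for example with a pywikipedia bot) is left out; only the string-building is shown.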

Scraper and svn script

OK, so there are several things I want to do. Partly I just want to see models of scraper scripts in general, because I've had several occasions where I could have used one. But the specific thing I asked about was copying code from Misza's page of Fisheye. One of his scripts may itself show me the general solution, as it was used for updating code on botwiki from svn. Misza is inactive but has lots of code and has the corner on archive bots. According to a post on his talk page several years ago, all of his code is MIT licensed unless otherwise stated, so I thought I'd grab it all 1) to preserve it against an account deactivation, 2) to be able to consult it for models, and 3) if necessary, to replace his archive bots.--Doug.(talk • contribs) 07:50, 7 December 2011 (EST)
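A generic scraper sketch in Python using only the standard library, assuming the Fisheye listing page links each script with an ordinary `<a href>`. Whether those links lead to raw source or to HTML views of it depends on the Fisheye setup, so the `.py` suffix filter, the function names (`extract_links`, `fetch`, `mirror`) and the output layout are all hypothetical. The pattern is: fetch one index page, collect matching links, then download each one with a pause between requests.

```python
import os
import time
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.request import urlopen


class LinkCollector(HTMLParser):
    """Collect absolute URLs of <a href> links whose path ends in one of `suffixes`."""

    def __init__(self, base_url, suffixes):
        super().__init__()
        self.base_url = base_url
        self.suffixes = tuple(suffixes)
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        url = urljoin(self.base_url, href)
        # Test the path only, so a query string such as "?r=5" does not hide the suffix.
        if urlparse(url).path.endswith(self.suffixes) and url not in self.links:
            self.links.append(url)


def extract_links(html, base_url, suffixes=(".py",)):
    """Return matching links from one HTML page, in page order, without duplicates."""
    collector = LinkCollector(base_url, suffixes)
    collector.feed(html)
    collector.close()
    return collector.links


def fetch(url):
    """Download one URL and decode it as text (needs network access)."""
    with urlopen(url) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def mirror(index_url, out_dir, suffixes=(".py",), delay=1.0):
    """Save every matching file linked from `index_url` into `out_dir`."""
    os.makedirs(out_dir, exist_ok=True)
    saved = set()
    for url in extract_links(fetch(index_url), index_url, suffixes):
        name = os.path.basename(urlparse(url).path)
        if name in saved:  # keep only the first link for each file name
            continue
        with open(os.path.join(out_dir, name), "w", encoding="utf-8") as fh:
            fh.write(fetch(url))
        saved.add(name)
        time.sleep(delay)  # pause between requests to go easy on the server
```

Parsing is kept separate from downloading so the link filter can be checked against a saved copy of the listing page before anything is fetched; `mirror` would then be pointed at the actual Fisheye listing URL, which is not given here.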